Ansible Builder and Execution Environments

With the introduction of Ansible Automation Platform 2, we now have access to execution environments: container-based replacements for Python virtual environments.

Execution environments decouple our playbook dependencies from the Automation Controller (previously Tower), allowing better portability and scale. So how do we work with them?

Out of the Box

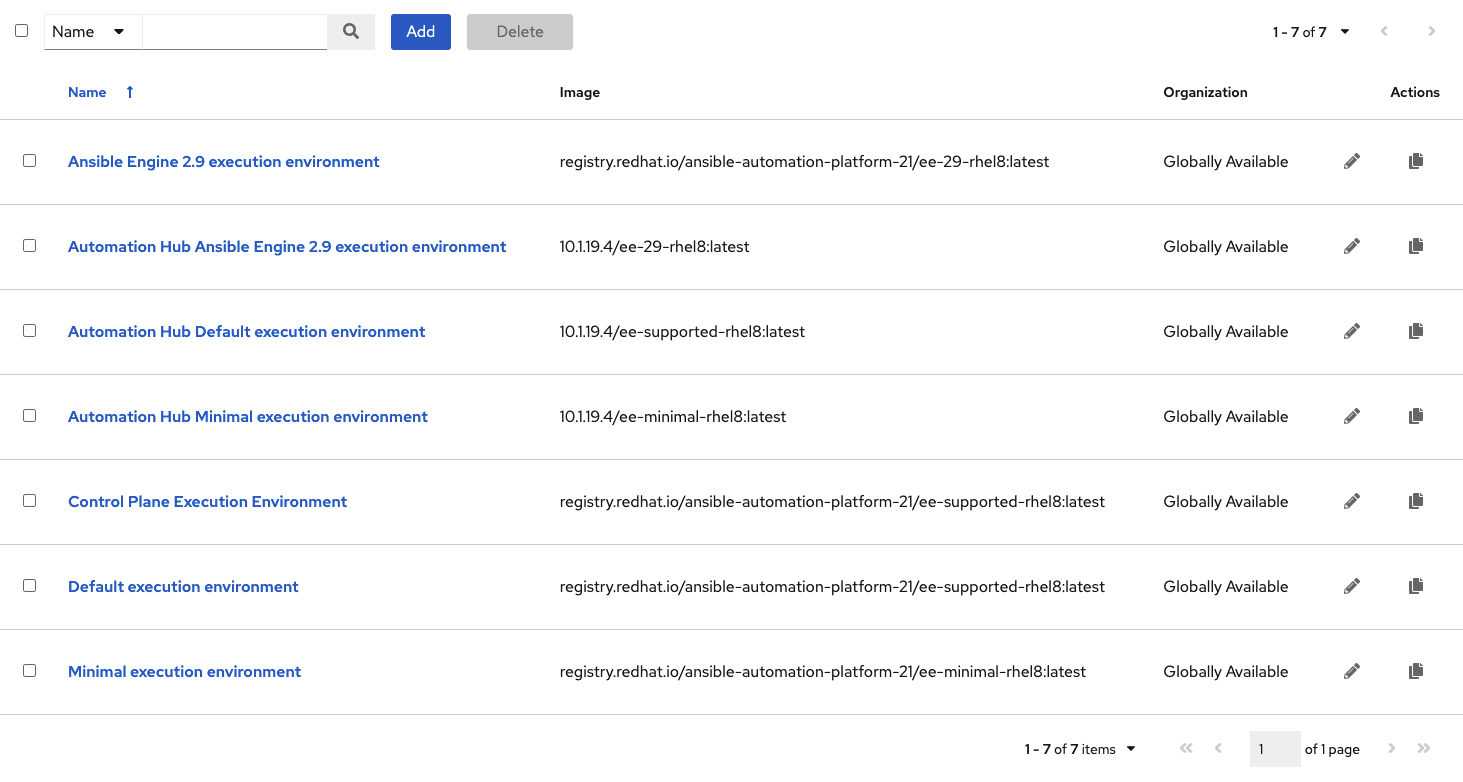

After a fresh installation of the Automation Controller, when you navigate to Administration > Execution Environments, you will see a list of images already used by the system.

The environments listed on this screen all come from three images currently released by Red Hat:

| Name | Image |
|---|---|
| Compatibility Environment | ansible-automation-platform-21/ee-29-rhel8 |
| Supported Environment | ansible-automation-platform-21/ee-supported-rhel8 |
| Minimal Environment | ansible-automation-platform-21/ee-minimal-rhel8 |

ee-29-rhel8 contains Ansible 2.9 for compatibility with playbooks and content written for previous versions of the Ansible Automation Platform.

As we advance with this post, I want to focus on the following two images since I will be using the current release of the Ansible Automation Platform:

- ee-supported-rhel8

- ee-minimal-rhel8

Supported Environment

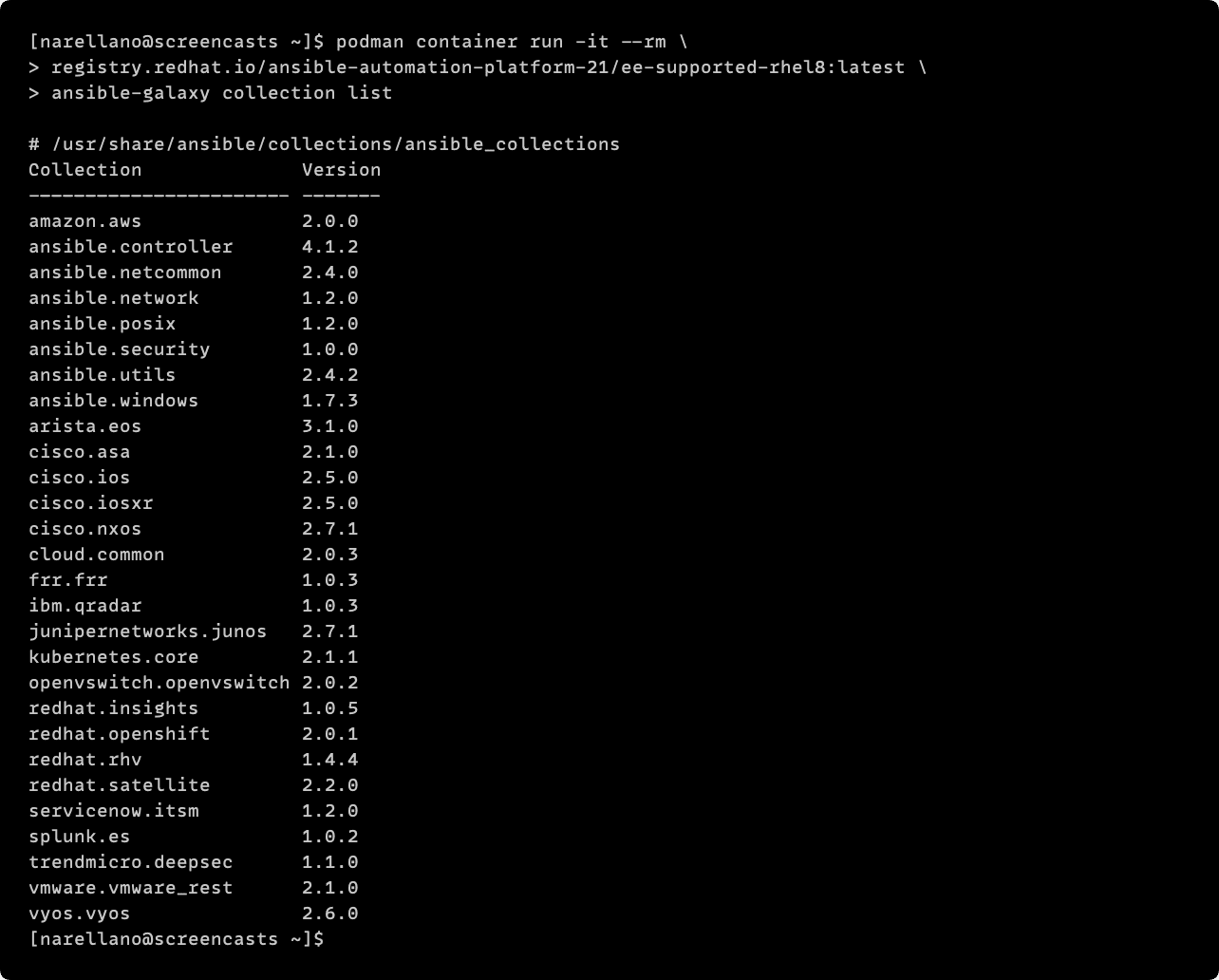

ee-supported contains ansible-core 2.12 and automation content collections supported by Red Hat.

This is the description from the actual catalog item that you can find here: Supported Execution Environment. So what does Red Hat mean by content collections supported by Red Hat? To showcase this, I pulled the image from registry.redhat.io and ran the command ansible-galaxy collection list.

Minimal Environment

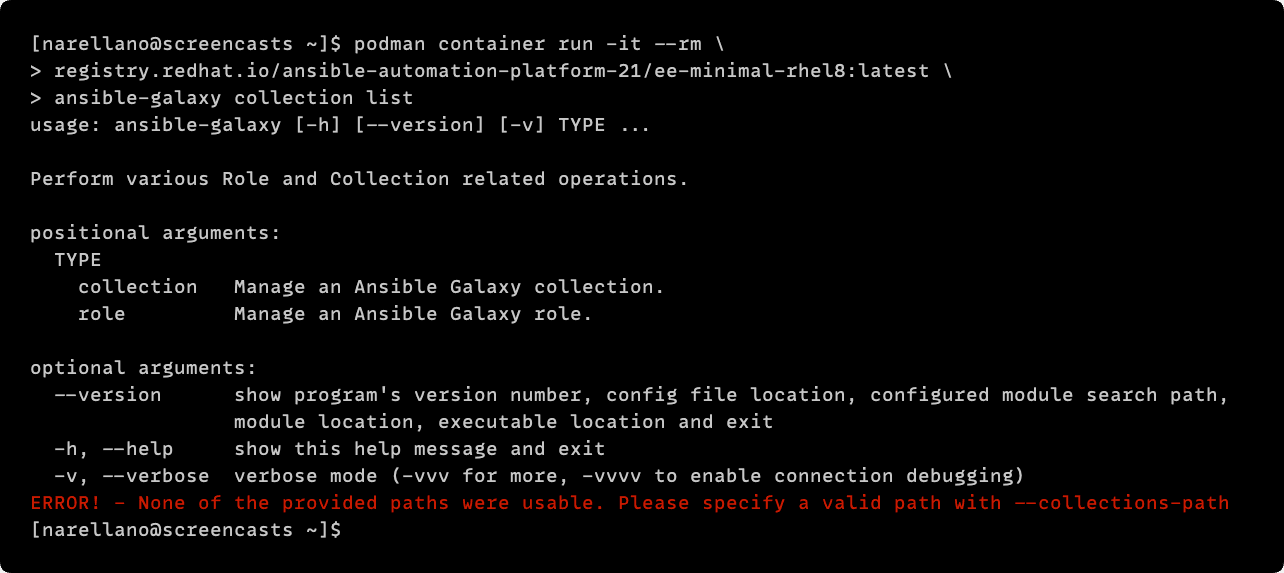

ee-minimal contains ansible-core 2.12.

This is the description from the actual catalog item that you can find here: Minimal Execution Environment. When they said it contains just ansible-core 2.12, they meant it. To showcase this, I pulled the image from registry.redhat.io and ran the command ansible-galaxy collection list.

You can see from the error in the console that zero collections are present in the environment!

Looking at what is available in those two supported execution environments, you might be asking: how am I supposed to use other content with the Ansible Automation Platform?

Building a Custom Image

When we need to provide different collections, modules, or custom code for our execution environments, we need to look at ansible-builder. Ansible Builder is a tool that helps us create Ansible execution environments.

To understand how this all works, I will walk you through building an execution environment for a networking operating system called RouterOS. I have a GitHub repository that contains everything we need to follow along with the post.

Preparing Our Workshop

To work with ansible-builder, we first need a few packages installed on our system. I am running these commands on a standard server installation of RHEL 8.

    # git & make - used for the GitHub repository
    # pip3 - required for installing ansible-builder
    sudo dnf install python38-pip git make

    # Install ansible-builder
    pip3 install --user ansible-builder

    # Login is required to access registry.redhat.io
    podman login registry.redhat.io
    # Username: *****
    # Password: *****
    # Login Succeeded!

With our system dependencies successfully installed, we need to pull in the GitHub repository.

    # Using the home directory in this example
    cd ~

    # Get a local copy of the repository
    git clone https://github.com/nickarellano/ansible-execution-environments

    # Change directories into the repository
    cd ansible-execution-environments

The overall repository looks like what is pictured below.

    # Filesystem
    ~/ansible-execution-environments/
    ├─ inventory/
    │  ├─ hosts
    ├─ project/
    │  ├─ mikrotik.yml
    ├─ Makefile
    ├─ collections.yml
    ├─ execution-environment.yml

The only things that we need to worry about at this point are two files:

- execution-environment.yml

- collections.yml

The two files below are similar to the ones in the repository and show the minimum that this example needs. This execution-environment.yml uses build_arg_defaults to pass in EE_BASE_IMAGE, which lets us build an example execution environment without the rest of the repository details getting in the way.

    # Filesystem
    ~/example/
    ├─ collections.yml
    ├─ execution-environment.yml

    # execution-environment.yml
    ---
    version: 1.0.0

    build_arg_defaults:
      EE_BASE_IMAGE: 'registry.redhat.io/ansible-automation-platform-21/ee-minimal-rhel8'

    dependencies:
      galaxy: collections.yml

    # collections.yml
    ---
    collections:
      - name: community.routeros
        version: 2.0.0
      - name: ansible.netcommon
        version: 2.4.0
      - name: ansible.utils
        version: 2.4.2

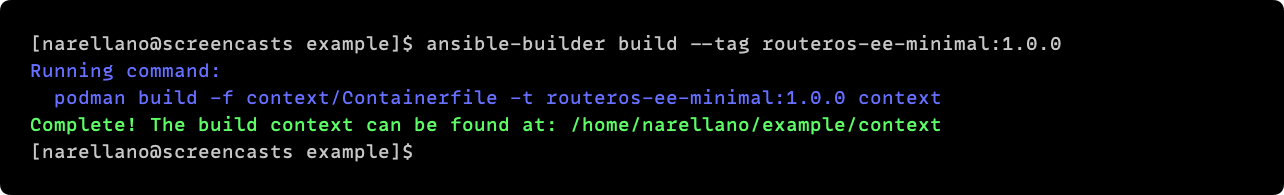

If you copy these two files to your filesystem and run ansible-builder build from that directory, you should see output like the following in the terminal.

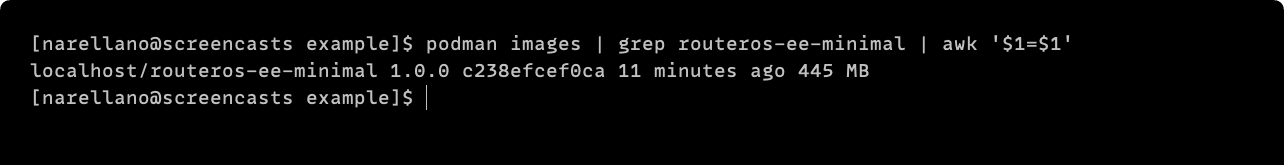

Ansible Builder knows to look by default for a file called execution-environment.yml, so without any extra parameters, everything is built successfully! We can verify this image is available via the command podman images.
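The copy step described above can also be scripted. The following is a minimal sketch, assuming a POSIX shell; write_example_files is a hypothetical helper name of my own (it is not part of ansible-builder), and the file contents are simply the two example files from this post.

```shell
# write_example_files is a hypothetical helper (not part of ansible-builder).
# It writes the two example files from this post into the given directory.
write_example_files() {
    target_dir="$1"
    mkdir -p "${target_dir}" || return 1

    # Execution environment definition; ansible-builder looks for this name by default.
    cat > "${target_dir}/execution-environment.yml" <<'EOF'
---
version: 1.0.0

build_arg_defaults:
  EE_BASE_IMAGE: 'registry.redhat.io/ansible-automation-platform-21/ee-minimal-rhel8'

dependencies:
  galaxy: collections.yml
EOF

    # Collections to install into the image.
    cat > "${target_dir}/collections.yml" <<'EOF'
---
collections:
  - name: community.routeros
    version: 2.0.0
  - name: ansible.netcommon
    version: 2.4.0
  - name: ansible.utils
    version: 2.4.2
EOF
}
```

After calling write_example_files ~/example, changing into ~/example and running ansible-builder build performs the build with no extra parameters, and podman images should then list the new image.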

Contents of the Repository

In the above example, I used ansible-builder to build a new image using the two files I copied into an example directory. So what is different about the repository?

To simplify processes, I added a Makefile with the following contents:

    # [ supported, minimal ]
    BASE_VERSION ?= minimal
    CONTAINER_NAME ?= routeros-ee-$(BASE_VERSION)
    CONTAINER_TAG ?= 1.0.0

    .PHONY: lint
    lint: # Lint the repository with yaml-lint
    	yaml-lint .

    .PHONY: build
    build: # Build the execution environment image
    	ansible-builder build \
    		--build-arg EE_BASE_IMAGE=registry.redhat.io/ansible-automation-platform-21/ee-$(BASE_VERSION)-rhel8 \
    		--tag $(CONTAINER_NAME):$(CONTAINER_TAG) \
    		--container-runtime podman

    .PHONY: run
    run: # Run the example playbook in the execution environment
    	ansible-runner run \
    		--container-image localhost/$(CONTAINER_NAME):$(CONTAINER_TAG) \
    		--process-isolation \
    		-p mikrotik.yml .

    .PHONY: list
    list: # List all of the installed collections
    	podman container run -it --rm \
    		localhost/$(CONTAINER_NAME):$(CONTAINER_TAG) \
    		ansible-galaxy collection list

    .PHONY: shell
    shell: # Run an interactive shell in the execution environment
    	podman run -it --rm \
    		localhost/$(CONTAINER_NAME):$(CONTAINER_TAG) \
    		/bin/bash

The contents might be overwhelming, but if you look through each of the different commands, ultimately, I am just passing variables into the various ansible commands needed to build and test the execution environment. You may have noticed I have ansible-runner available under the make run command. After creating two test images using the repository, we will discuss that.

Building Two Test Images

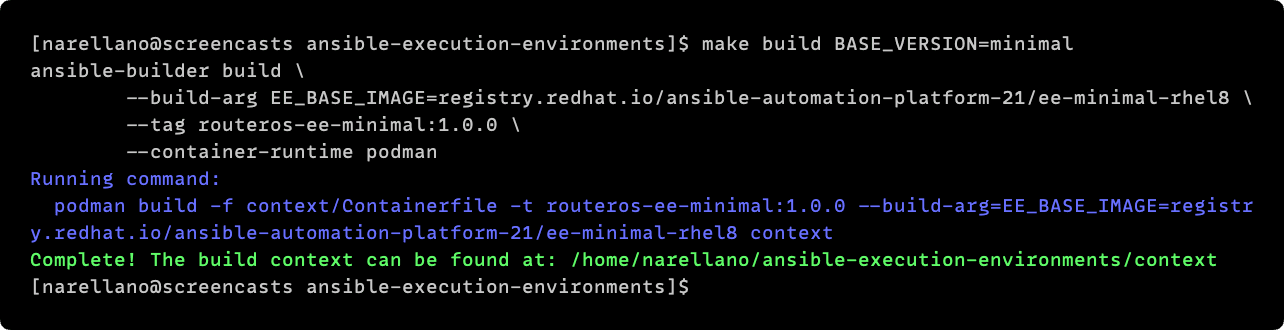

One thing I wanted to see for myself was how the two Red Hat-provided images (minimal and supported) would work with the same playbook. We can build a fresh minimal image and a fresh supported image using the Makefile, starting with the command make build.

By default, the Makefile will use the minimal image and reference minimal in the other commands, but I'm going to reference the version explicitly in the screenshot. The flexibility of the Makefile will allow me to work with different environments just by changing variables in the command line.

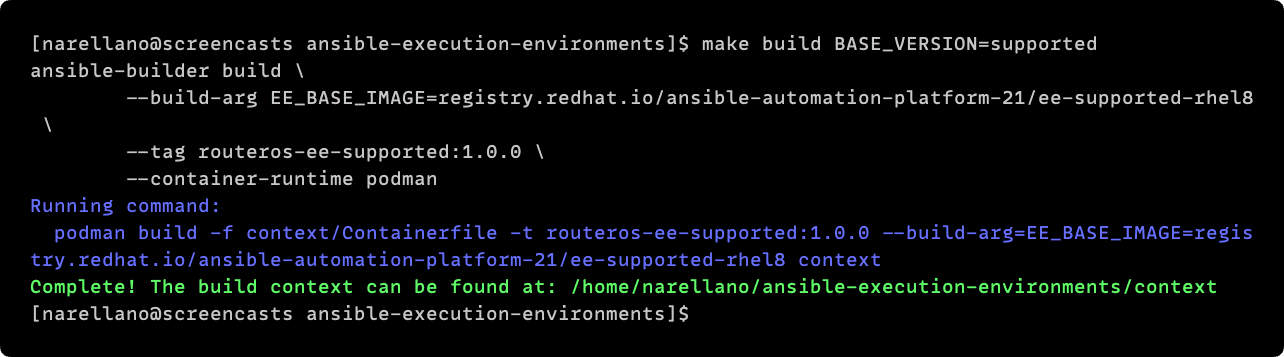

In the second build command, you can see that I set the BASE_VERSION to supported.
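Values passed on the make command line, such as make build BASE_VERSION=supported, override the defaults that the Makefile assigns with ?=. The sketch below mimics that expansion in plain shell so you can see the resulting command without running a build. build_command is a hypothetical helper of my own, and it only prints the command; it does not invoke ansible-builder.

```shell
# build_command is a hypothetical helper that prints (does not run) the
# ansible-builder command the Makefile's build target expands to.
build_command() {
    # Same defaults as the Makefile's ?= assignments.
    base_version="${1:-minimal}"
    container_name="${2:-routeros-ee-${base_version}}"
    container_tag="${3:-1.0.0}"

    printf '%s %s %s %s\n' \
        "ansible-builder build" \
        "--build-arg EE_BASE_IMAGE=registry.redhat.io/ansible-automation-platform-21/ee-${base_version}-rhel8" \
        "--tag ${container_name}:${container_tag}" \
        "--container-runtime podman"
}

build_command            # defaults, as with: make build
build_command supported  # as with: make build BASE_VERSION=supported
```

Because CONTAINER_NAME is derived from BASE_VERSION, changing one variable is enough to get a distinct image name for each base.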

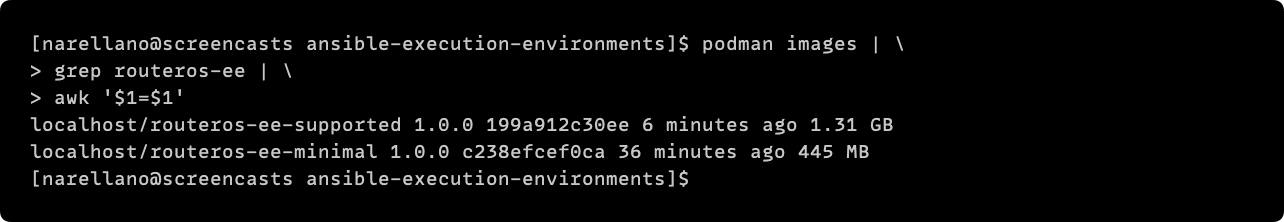

Now that we have both images built, let's look at what we have under podman images. At first glance, you will notice the size difference between the two execution environments: our minimal environment is only 445 MB, whereas our supported version is 1.31 GB!

For some, it may not be worth it to dig into the dependencies, but there are some benefits we get by using the minimal base environment:

- ~66% less storage utilization (think scale)

- Faster pulls to execution nodes

- Faster pushes during iteration
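The storage figure in the list above follows directly from the two image sizes. As a quick check, here is a small sketch using awk; savings_percent is a hypothetical helper name, and it assumes both sizes are given in the same unit (with 1 GB taken as 1000 MB).

```shell
# savings_percent is a hypothetical helper: percentage saved by the smaller
# image relative to the larger one. Both sizes must use the same unit.
savings_percent() {
    awk -v small="$1" -v large="$2" \
        'BEGIN { printf "%.0f\n", (1 - small / large) * 100 }'
}

# Sizes reported by podman images: 445 MB (minimal) and 1.31 GB (supported).
savings_percent 445 1310   # prints 66
```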

When looking into execution environments, I had difficulty finding information on how to test them. I wanted a way to test these environments before deploying them into my Private Automation Hub and, ultimately, the Automation Controller.

After some digging, I stumbled upon some information in the documentation for ansible-runner. Ansible Runner has an option to run your playbook files through an execution environment!

Time to Run

To start using ansible-runner, we need to install it, just as we did with ansible-builder.

    # Install ansible-runner
    pip3 install --user ansible-runner

Ansible Runner is going to look for the following:

- inventory directory for your hosts file

- project directory for your playbooks

We already have this set up in a snippet of our repository filesystem:

    # Filesystem
    ├─ inventory/
    │  ├─ hosts
    ├─ project/
    │  ├─ mikrotik.yml
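Since a run depends on the inventory and project directories being in place, a small pre-flight check can catch a misplaced file before the container starts. This is a sketch only; check_layout is a hypothetical helper of my own and is not part of ansible-runner.

```shell
# check_layout is a hypothetical pre-flight helper (not part of ansible-runner).
# It confirms the inventory file and playbook exist where the run expects them.
check_layout() {
    data_dir="$1"
    playbook="$2"

    if [ ! -f "${data_dir}/inventory/hosts" ]; then
        echo "missing ${data_dir}/inventory/hosts" >&2
        return 1
    fi
    if [ ! -f "${data_dir}/project/${playbook}" ]; then
        echo "missing ${data_dir}/project/${playbook}" >&2
        return 1
    fi
    echo "layout ok"
}
```

From the repository root, check_layout . mikrotik.yml && make run would only start the run when both files are present.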

Hosts File

The hosts file contains the one test host available on my network, along with the parameters the playbook needs, such as the connection type and credentials.

    # ./inventory/hosts
    [routeros]
    192.168.0.1

    [routeros:vars]
    ansible_connection=network_cli
    ansible_network_os=routeros
    ansible_user=testing
    ansible_ssh_pass=testing

The Playbook

I wanted a straightforward "Hello World!" type of example to make sure playbooks can execute without any dependency errors.

    # ./project/mikrotik.yml
    ---
    - name: 'Get the system identity of MikroTik devices'
      gather_facts: no
      hosts: routeros
      tasks:
        - name: 'Register the system identity in a variable'
          community.routeros.command:
            commands:
              - ':put [ /system identity get name ];'
          register: system_identity

        - name: 'Print the system_identity variable in a debug message'
          when: system_identity is defined
          ansible.builtin.debug:
            msg: '{{ system_identity.stdout }}'

Minimal Environment Test

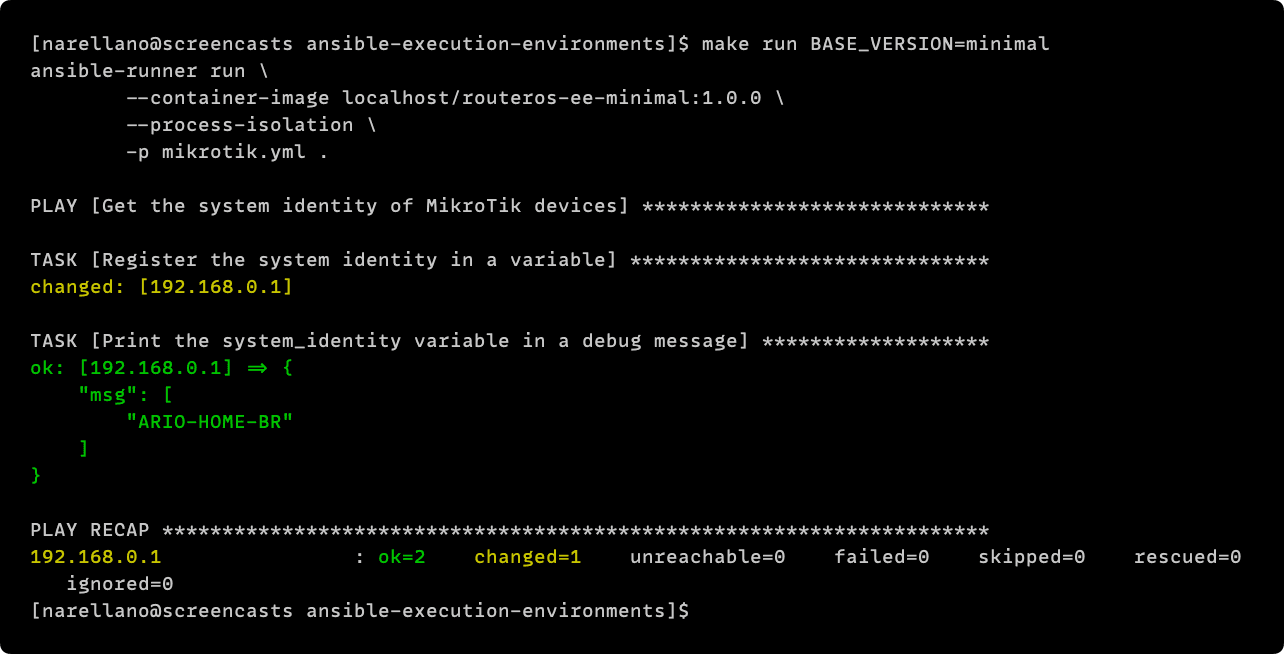

The Makefile can quickly test the minimal environment by running the make run command. By default, this command will spin up the minimal execution environment that we built with the make build command. I am going to pass in the version explicitly, as we did previously, and we will get the following output:

Supported Environment Test

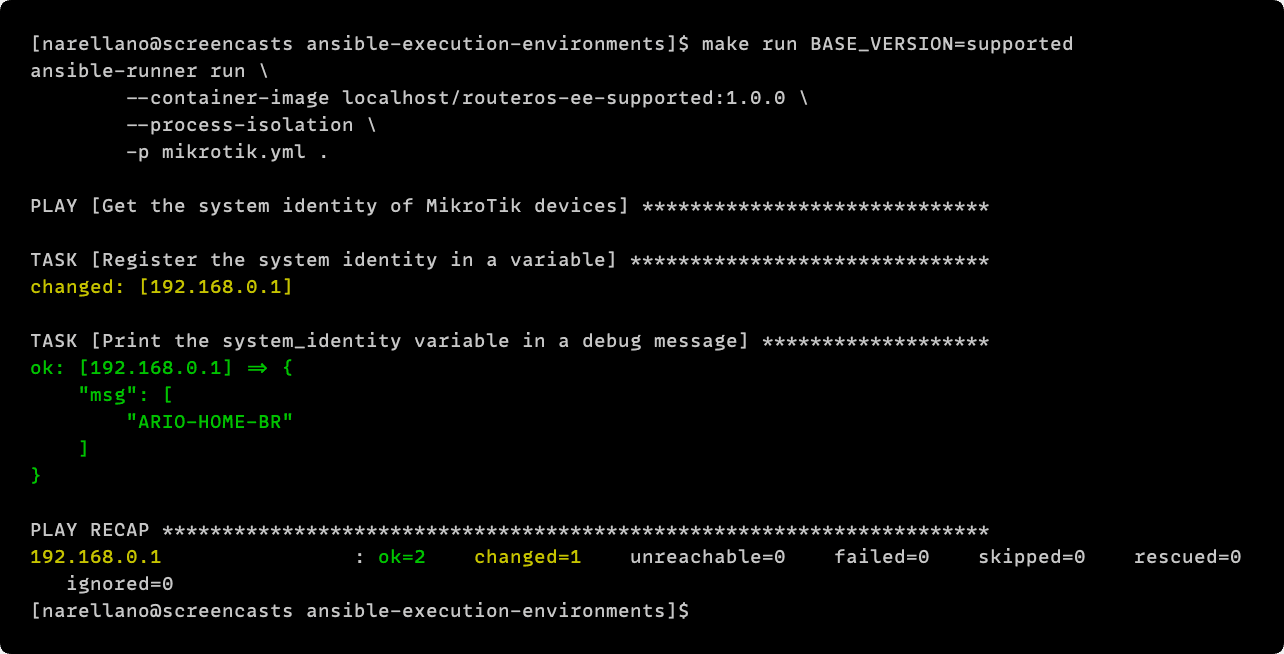

Since we can run the playbook without errors, we know that our environment is fully functional! We can now test the supported environment by passing the appropriate BASE_VERSION.

Closing

Hopefully, this post has answered some of the questions you may have had about what execution environments are as part of the new Ansible Automation Platform.

Using this process, you should be able to build your own minimal execution environments and test them with ansible-runner before you push them into your Ansible Automation ecosystem.